Stephen Hawking on the Advent of ‘the Meta-Human’, II. -- ‘‘‘AI Android Robotics’’’.

Dear Reader,

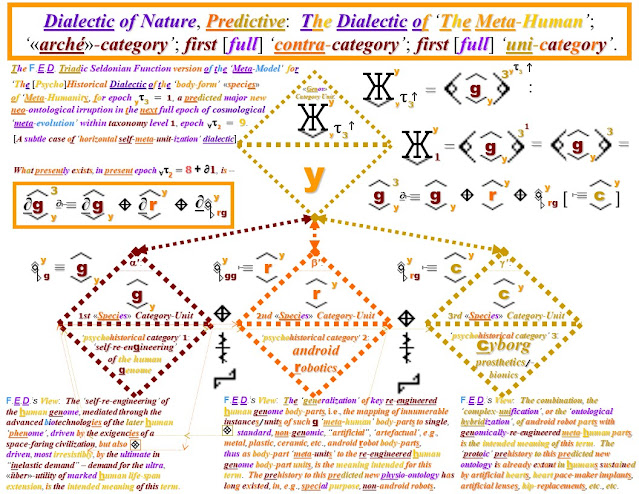

We have predicted, as the ‘neo-ontology’ of the next epoch of cosmological ‘meta-evolution’ -- at least locally, in our solar system -- an ‘ontologically-dynamical, neo-ontological meta-finite singularity’ that we call ‘the irruption of the meta-human’ -- the advent of a new «genos» of three new «species» of cosmological ontology that we call ‘meta-humanity’ --

qh = h = h1 ---> h2 = h + Δh = h + qhh |-= h + y .

We hold that none of the three, dialectically-interrelated «species» of this new «genos» of ‘meta-humanity’ that we have predicted is as yet extant in the contemporary Terran ecosphere of [mere] humanity.

We also hold that ‘protoic preformations’ of each of these three «species» are already extant in our times, largely, though not completely, unnoticed as such by most of our contemporaries.

Given this background, it is interesting to read the thoughts of the late physicist Stephen Hawking on the systematically second of these three «species», that of ‘‘‘AI Android Robotics’’’, in his posthumously published 2018 book Brief Answers to the Big Questions [Bantam, NY] --

[pp. 184-189]: “... If computers continue to obey Moore’s Law, doubling their speed and memory capacity every eighteen months, the result is that computers are likely to overtake humans in intelligence at some point in the next hundred years.”

“When an artificial intelligence (AI) becomes better than humans at AI design, so that it can recursively improve itself without human help, we may face an intelligence explosion that ultimately results in machines whose intelligence exceeds ours by more than ours exceeds that of snails.”

“When that happens, we will need to ensure that the computers have goals aligned with ours.”

“It’s tempting to dismiss the notion of highly intelligent machines as mere science fiction, but this would be a mistake, and potentially our worst mistake ever.”

“... There is now a broad consensus that AI research is progressing steadily and that its impact on society is likely to increase. The potential benefits are huge ... . Because of the great potential of AI, it is important to research how to reap its benefits while avoiding potential pitfalls.”

“Success in creating AI would be the biggest event in human history.”

“Unfortunately, it might also be the last, unless we learn how to avoid the risks. Used as a toolkit, AI can augment our existing intelligence to open up advances in every area of science and society. However, it will also bring dangers. While primitive forms of artificial intelligence developed so far have proved very useful, I fear the consequences of creating something that can match or surpass humans. The concern is that AI would take off on its own and redesign itself at an ever increasing rate. Humans, who are limited by slow biological evolution [F.E.D.: Not if they take up ‘human-genome self-re-engineering’, as noted elsewhere in this book by Hawking himself, although such would simply “supersede” contemporary genomic humanity in a different way.], couldn’t compete and would be superseded. And in the future AI could develop a will of its own, a will that is in conflict with ours.”

“Others believe that humans can command the rate of [F.E.D.: development of] technology for a decently long time, and that the potential of AI to solve many of the world’s problems will be realised.”

“Although I am well known as an optimist regarding the human race, I am not so sure.”

“... Little serious research has been devoted to these issues outside a few small non-profit institutes.”

“Fortunately, this is now changing. Technology pioneers Bill Gates, Steve Wozniak and Elon Musk have echoed my concerns, and a healthy culture of risk assessment and awareness of societal implications is beginning to take root in the AI community.”

“In January 2015, I, along with Elon Musk and many AI experts, signed an open letter on artificial intelligence, calling for serious research into its impact on society. In the past, Elon Musk has warned that superhuman artificial intelligence is capable of providing incalculable benefits, but if deployed incautiously will have an adverse effect on the human race. ...”

[p. 193]: “Why are we so worried about artificial intelligence? Surely humans are always able to pull the plug?”

“People asked a computer, ‘Is there a God?’ And the computer said, ‘There is now,’ and fused the plug.”

FYI: Much of the work of Karl Seldon, and of his collaborators, including work by “yours truly”, is available, for your free-of-charge download, via --

Regards,

Miguel Detonacciones,

Member, Foundation Encyclopedia Dialectica [F.E.D.],

Officer, F.E.D. Office of Public Liaison
